Sam Altman Points Fingers At Tesla Autopilot, Grok Issues After Elon Musk Warns Against ChatGPT Use Over Safety

- Altman said ChatGPT faces conflicting criticism and stressed the need to protect vulnerable users.

- The exchange comes amid continued scrutiny over chatbot safety and mental health use.

- Altman cited Tesla Autopilot deaths and referenced Grok-related image issues under regulatory review.

OpenAI CEO Sam Altman pushed back after Tesla’s Elon Musk warned users not to let their loved ones use ChatGPT, sparking a public spat over AI safety, mental health risks, and platform responsibility.

Altman Defends ChatGPT Amid Musk’s Criticism

Musk wrote on X, “Don’t let your loved ones use ChatGPT,” after crypto-focused influencer DogeDesigner claimed the chatbot had been linked to nine deaths, including five alleged suicides involving teens and adults.

Altman responded, saying criticism of ChatGPT often cuts both ways. “Sometimes you complain about ChatGPT being too restrictive, and then in cases like this, you claim it’s too relaxed,” Altman wrote on X. “Almost a billion people use it, and some of them may be in very fragile mental states.”

“We will continue to do our best to get this right, and we feel [a] huge responsibility to do the best we can, but these are tragic and complicated situations that deserve to be treated with respect,” Altman said.

Altman also drew a comparison to Tesla’s driver-assistance systems, saying that more than 50 people have reportedly died in crashes linked to Autopilot. He said the only time he rode in a car using it, he felt it was “far from a safe thing for Tesla to have released.”

Altman added that he would not “even start on some of the Grok decisions,” referring to the recent surge of AI-generated nonconsensual explicit images produced through Grok on X, which has prompted regulatory probes in Europe, India, and Malaysia.

“You take ‘every accusation is a confession’ so far,” Altman wrote.

The long-running feud between the two entrepreneurs has played out in public. Musk sued OpenAI last year over its plans to convert into a public benefit corporation, arguing that the company had strayed from its founding agreement by prioritizing profit over the broader benefit of humanity. Musk co-founded OpenAI with Altman in 2015, when it was launched as a research lab, but left the board in 2018. Since then, he has been a blunt critic of the company.

Lawsuit Put ChatGPT Safety Under Scrutiny

The exchange comes amid heightened scrutiny of AI chatbots, with lawsuits and media reports focusing on their potential role in mental health crises.

The parents of a 16-year-old boy sued OpenAI in August 2025 for wrongful death, saying ChatGPT aided their son’s suicide. They claimed their son became reliant on the chatbot for communication in the weeks leading up to his death, according to a report by NBC News.

The teen’s family alleged safeguards meant to trigger during emergencies did not work. The family’s lawsuit claimed wrongful death, design defects, and failure to warn. OpenAI maintained that ChatGPT directs users to crisis hotlines and other resources outside of the app. The case joins a growing number of wrongful death lawsuits filed against OpenAI in recent months.

The company has noted that safeguards can degrade over longer conversations, and said it’s working to improve safety tools for teens. Last year, OpenAI detailed plans to better respond to crises, including expanding safety measures for long conversations.

Tesla Safety Back In Focus

Altman’s comments also come amid continued scrutiny of Tesla’s safety record. Public databases of Tesla-related deaths include scores of crashes in which Autopilot or other driver-assistance technologies were reported to have been engaged moments before impact, according to data from U.S. regulators, court filings, and news reports.

Tesla has also been investigated by U.S. and foreign regulators over its emergency door release systems, with a focus on whether the door controls are visible and easy to find in an emergency. Regulators in Europe have raised similar questions, and China reportedly mulled restrictions on fully retractable door handles.

How Did Stocktwits Users React?

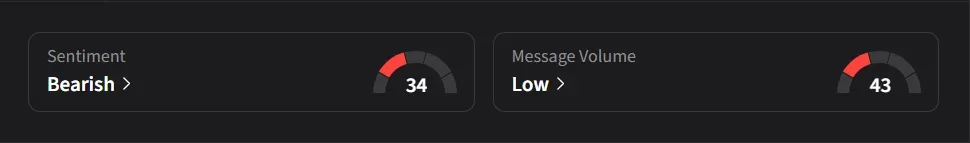

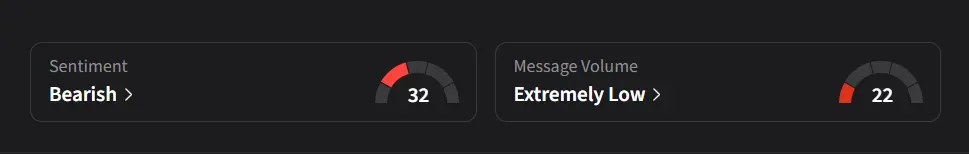

On Stocktwits, retail sentiment toward both Tesla and OpenAI was ‘bearish’, with message volume ‘low’ for TSLA and ‘extremely low’ for OpenAI.

Tesla’s stock has declined nearly 7% so far this year, trailing the benchmark S&P 500 and Nasdaq indices.

For updates and corrections, email newsroom[at]stocktwits[dot]com.
