
Why Gavin Baker Thinks Nvidia’s Groq Deal Is Bad News For Many AI Chip Firms

- Gavin Baker said Nvidia’s Groq licensing deal raises competitive pressure on standalone AI ASIC developers.

- Baker said inference is splitting into prefill and decode, with Nvidia using Rubin CPX, Rubin, and a Groq-derived SRAM variant for different workloads.

- Baker said customers are now willing to pay for SRAM speed, increasing his confidence that all AI ASICs except TPU, AI5, and Trainium will eventually be canceled.

Gavin Baker, managing partner at Atreides Management, said Nvidia’s decision to license technology from AI startup Groq points to mounting pressure on independent artificial intelligence (AI) chip firms and reinforces his view that the sector is moving toward consolidation.

Nvidia’s stock rose 1.2% to $190.89 at the time of writing.

Baker Flags Competitive Pressure

Baker said in a post on X that Nvidia’s approach of combining multiple architectures raises the competitive bar for standalone AI application-specific integrated circuit (ASIC) developers.

The comments followed Nvidia’s disclosure of a licensing deal with Groq. Nvidia said the agreement gives it the right to integrate Groq’s chip design into future products, while Groq will continue operating as an independent company. Some of Groq’s senior executives will join Nvidia to help scale the licensed technology. Financial terms were not disclosed.

Baker Details Prefill And Decode Workloads

Baker said inference workloads are increasingly disaggregating into prefill and decode stages, with each phase favoring different hardware characteristics. He said prefill workloads reward memory capacity, while decode workloads depend on extremely high memory bandwidth and low latency.

He said Nvidia can deploy Rubin CPX, Rubin, and a Groq-derived static random-access memory (SRAM) variant together: Rubin CPX is optimized for massive context windows during prefill, Rubin handles training and high-density, batched inference, and the Groq-derived variant targets ultra-low-latency, agentic reasoning inference.

SRAM Performance Gains Are Now Clear

Baker said SRAM-based architectures can deliver tokens-per-second performance far exceeding that of graphics processing units (GPUs), tensor processing units (TPUs), or other ASICs, even though they carry a higher cost per token because they run smaller batch sizes. He said recent results from Groq and Cerebras show customers are willing to pay for speed and low latency, settling a question that was still open roughly 18 months ago.

Based on that shift, Baker said he is now more confident that all AI ASICs will eventually be canceled except Google’s TPU, Tesla’s AI5, and Amazon’s Trainium.

Baker said Groq’s many-chip rack architecture is much easier to integrate with Nvidia’s networking stack, potentially even within a single rack, while Cerebras’s wafer-scale engine almost requires a rack of its own.

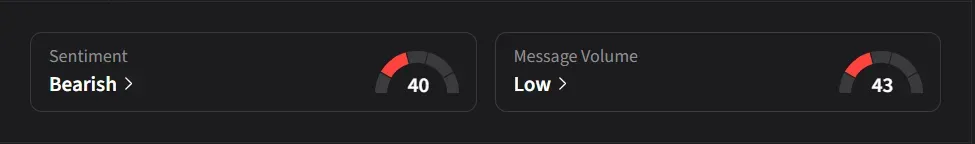

How Did Stocktwits Users React?

On Stocktwits, retail sentiment for Nvidia was ‘bearish’ amid ‘low’ message volume.

One user expects the stock to “go to all time high” before the end of the year.

Another user said, “they [Nvidia] were already laps ahead of their competition. This deal not only secured that lead but extended it. Perfect synergy with Groq.”

Nvidia’s stock has risen 42% so far in 2025.

For updates and corrections, email newsroom[at]stocktwits[dot]com.
