
Advantage Micron? SK Hynix Sees HBM Chip Sales Growing By 30% Every Year Through 2030 On ‘Very Firm And Strong’ AI Demand

As the artificial intelligence (AI) revolution takes a firm grip worldwide, the demand for memory chips that power the technology is expected to surge in the coming years.

A senior executive at South Korean memory chipmaker SK Hynix stated in an interview with Reuters that the company anticipates a 30% annual increase in high-bandwidth memory (HBM) chip demand until 2030.

In dollar terms, the executive expects the market for custom HBM to reach tens of billions of dollars by 2030. Custom HBM chips include a customer-specific base die that helps manage memory, which makes it hard to swap them for a rival's memory products.

HBM chips are dynamic random access memory (DRAM) chips that provide a wider data channel, lower latency, and reduced power consumption, which are critical for high-performance computing, particularly in AI and machine learning applications.

In HBM chips, DRAM dies are stacked vertically and connected using through-silicon vias (TSVs).

Significant players in the HBM chip market are Micron Technology (MU), Samsung, SK Hynix, Intel and Fujitsu, according to Mordor Intelligence. Micron stock has gained about 42% year-to-date compared to the more modest 13% gain for the iShares Semiconductor Index (SOXX).

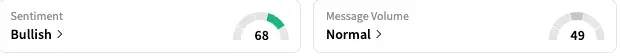

On Stocktwits, retail sentiment toward Micron stock remained 'bullish' by late Sunday, while the message volume was 'normal.'

Choi Joon-yong, the head of HBM business planning at SK Hynix, told Reuters that “AI demand from the end user is pretty much, very firm and strong.”

According to the executive, the relationship between AI build-outs and HBM purchases is "very straightforward," and the two are correlated. He called SK Hynix's projections conservative, given that they incorporate constraints such as available energy.

SK Hynix, a supplier to Nvidia (NVDA) and Apple (AAPL), reported strong quarterly results in late July, attributing the strength to the aggressive investments by global big tech companies in AI, which led to a steady increase in demand for AI memory.

The next-generation HBM chips, dubbed HBM4, are expected to be launched in the second half of 2025 or 2026, with substantial revenue anticipated in 2027.

A Bloomberg Intelligence report stated in January that the HBM chip market is expected to grow to $130 billion by the end of 2030, up from $4 billion in 2023.
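As a rough illustration of what those two endpoints imply, the sketch below computes the compound annual growth rate between $4 billion in 2023 and $130 billion in 2030. It assumes smooth compounding over seven years, which the report itself does not claim, and the function name `implied_cagr` is purely illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value.

    Assumption: growth compounds evenly once per year over the whole period.
    """
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints taken from the Bloomberg Intelligence figures cited above (in $ billions).
rate = implied_cagr(4.0, 130.0, 2030 - 2023)
print(f"Implied annual growth, 2023-2030: {rate:.1%}")
```

Under these assumptions the implied rate works out to roughly 64% a year. That figure describes the overall market estimate and is not directly comparable with SK Hynix's 30% annual demand projection, which may use a different base year and measure.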

For updates and corrections, email newsroom[at]stocktwits[dot]com.
