
Does Nvidia’s Groq Licensing Mega-Deal Expose A Quiet Weak Spot In Its AI Chip Empire?

- The Groq deal underscores Nvidia’s push to strengthen its position in AI inference, a faster-growing, more recurring-revenue segment than model training.

- Licensing Groq’s LPU technology and hiring its leadership helps Nvidia defend against rising threats from AMD and Google’s TPUs.

- It may also broaden its hardware portfolio beyond premium GPUs.

Just as whispers grew louder about Nvidia’s iron grip on the AI chip market beginning to loosen, CEO Jensen Huang reached for an unexpected lever. In a late-year move that caught many off guard, Nvidia struck a mega non-exclusive licensing agreement with AI startup Groq — the company behind the custom-built Language Processing Unit (LPU).

On the surface, it looks like a routine partnership. Look closer, and it may signal something far more consequential: a quiet recalibration of Nvidia’s strategy at a moment when competition in AI hardware is intensifying, new chip architectures are gaining attention, and customers are increasingly sensitive to cost, power efficiency, and supply constraints.

The $20 billion price Nvidia reportedly will pay for bringing on board Groq’s senior management team, including co-founder Jonathan Ross and President Sunny Madra, is widely seen as a coup of sorts. On the other hand, it could also mean a rich payday for Groq’s management team, as the company was valued at $6.9 billion in its latest funding round, completed in September.
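To put the reported price in context, the short sketch below compares it with the September valuation. It uses only the two figures cited above; the variable and function names are illustrative, and treating a licensing-and-hiring price as directly comparable to a funding-round valuation is a simplifying assumption.

```python
# Figures as reported in the article, in billions of US dollars.
deal_price_bn = 20.0      # reported price of the Nvidia-Groq agreement
last_valuation_bn = 6.9   # Groq's valuation in its September funding round


def price_to_valuation(price_bn: float, valuation_bn: float) -> float:
    """Return the price expressed as a multiple of the valuation."""
    return price_bn / valuation_bn


multiple = price_to_valuation(deal_price_bn, last_valuation_bn)
premium_pct = (multiple - 1.0) * 100.0  # how far the price sits above the valuation

print(f"Multiple: {multiple:.1f}x, premium: {premium_pct:.0f}%")
```

On these numbers, the reported price works out to roughly 2.9 times the last valuation.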

Plugging A Hole?

What makes LPUs distinctive is their singular focus on inference—the stage at which a trained machine-learning model draws conclusions from new data. Nvidia, by contrast, is widely seen as lagging Advanced Micro Devices (AMD) in AI inferencing chips, a segment many experts believe offers greater long-term potential than AI training hardware.

Training, which involves teaching large language models (LLMs) with large datasets, is a one-time expense, whereas inference generates a recurring revenue stream.

Source: IDTechEx via Signal Integrity Journal (Jan. 2024 report)

Meanwhile, the high cost of Nvidia’s AI accelerators has begun to act as a deterrent, particularly as its near-monopoly erodes and rival offerings gain traction. Alphabet, Inc.’s (GOOGL) (GOOG) in-house AI accelerators, known as Tensor Processing Units (TPUs), are reportedly 45% cheaper than Nvidia’s H100, according to A.I. News Hub. Adding a less expensive option to its arsenal could help Nvidia become cost-competitive.

Bringing in Ross is also an attempt to stave off the Google challenge. Reports from November stated that the Sundar Pichai-led company, which had long used its TPUs, built for its TensorFlow software, for in-house purposes, will begin selling them to Meta and other third-party firms. Ross’ LinkedIn profile shows that before founding Groq, he worked at Google for nearly five years and led TPU efforts there.

Commenting on the Groq deal, Baird analyst Tristan Gerra said:

“This announcement is in line with Nvidia's push into AI inferencing.”

The analyst said Nvidia’s vast lead in software libraries could be significantly accretive to the Groq platform.

Can Nvidia Keep A Firm Grip?

Citing AI supply chain chatter, Baird analyst Gerra said Nvidia will likely retain 70% of the AI chip market via its GPUs by 2030, while Google could claim a majority of the remaining 30% via its TPUs. The analyst expects competitors to continue struggling with their own custom ASIC (application-specific integrated circuit) designs. If Google is successful in porting the software stack to customer platforms, its TPU share could exceed 20% by 2030, he added.
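The share arithmetic in Gerra's forecast can be sketched as follows. The 70% Nvidia figure comes from the forecast above; the Google values of 16% and 21% are hypothetical points chosen only to sit just above the "majority of the remaining 30%" and "exceed 20%" thresholds, and the function name is illustrative.

```python
def share_breakdown(nvidia_pct: int, google_pct: int) -> dict:
    """Split the non-Nvidia market between Google and everyone else."""
    non_nvidia = 100 - nvidia_pct
    if not 0 <= google_pct <= non_nvidia:
        raise ValueError("Google's share must fit inside the non-Nvidia remainder")
    return {
        "non_nvidia_pct": non_nvidia,
        "others_pct": non_nvidia - google_pct,
        # "Majority" here means more than half of the non-Nvidia remainder.
        "google_has_majority_of_rest": google_pct * 2 > non_nvidia,
    }


# Hypothetical Google shares: just above 15% and just above 20%.
base = share_breakdown(nvidia_pct=70, google_pct=16)
upside = share_breakdown(nvidia_pct=70, google_pct=21)

print(base)
print(upside)
```

If Nvidia holds 70%, any Google share above 15% is a majority of the remainder, and a share above 20% would leave all other suppliers with less than 10% combined.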

Weighing in on the advantages each company holds, Gerra said the volume of Alphabet’s own workloads helps with its ASIC design, while Nvidia’s yearly cadence of new product platforms continues to apply tremendous pressure on the competition.

“Net, while we believe GPUs will retain the majority of the AI processor market by 2030, custom ASIC could be accretive to Nvidia's TAM over time.”

What Retail Feels About Nvidia Stock

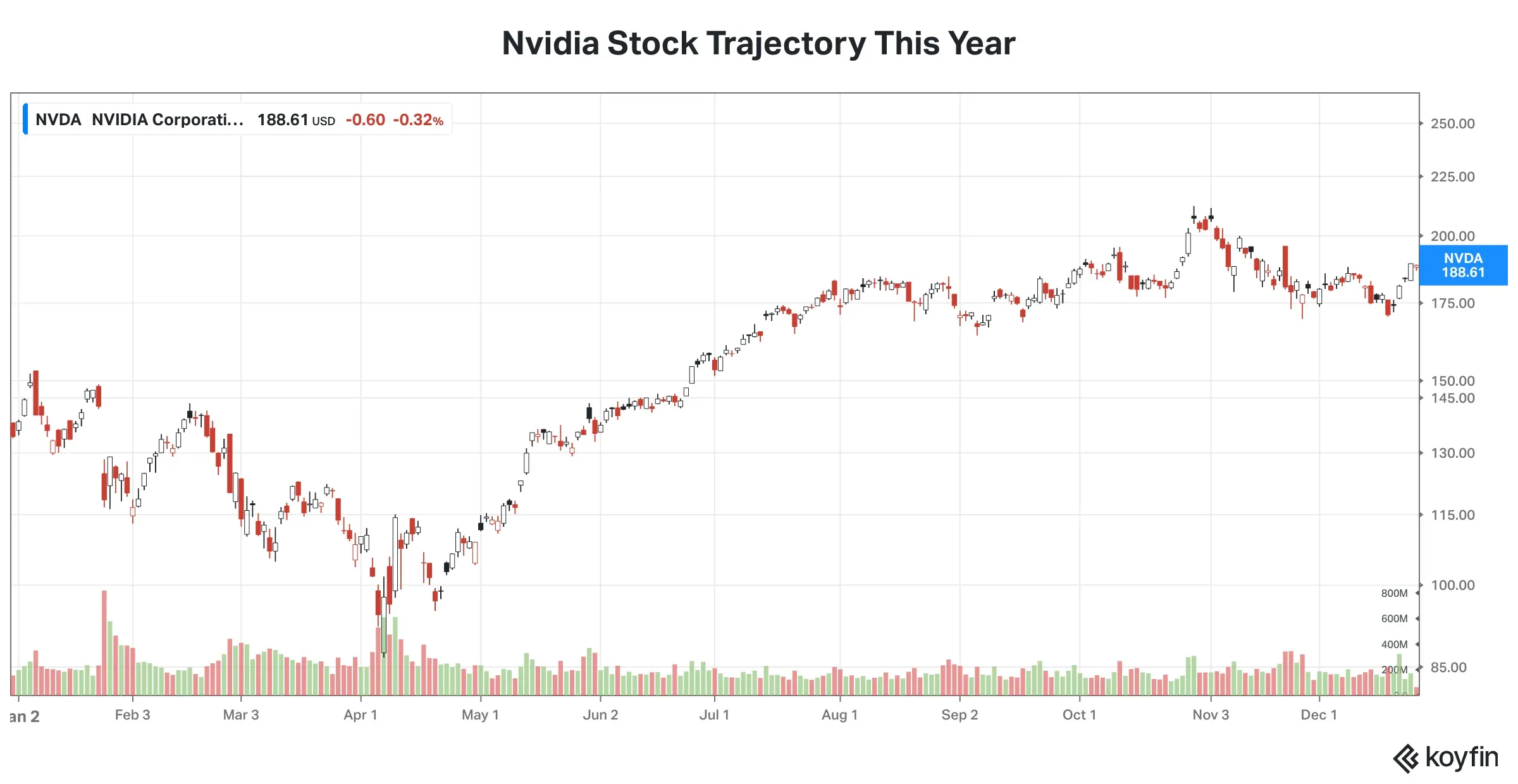

Nvidia’s stock, though up a robust 38% this year, has pulled back more than 12% from the year’s peak of $212.19, reached on Oct. 29.

Source: Koyfin
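As a rough check on the pullback figure, the sketch below derives the price level implied by a drop of at least 12% from the Oct. 29 peak. It uses only the peak price and percentage cited above; the variable names are illustrative, and the result is an upper bound on the share price at the time, not a quoted price.

```python
# Figures as reported in the article.
peak_price = 212.19   # year's peak in US dollars, reached on Oct. 29
min_pullback = 0.12   # "more than 12%" pullback from that peak

# A pullback of more than 12% means the stock traded below this level.
price_ceiling = peak_price * (1 - min_pullback)

print(f"Implied price ceiling: ${price_ceiling:.2f}")
```

A pullback of more than 12% from $212.19 therefore puts the stock below about $186.73.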

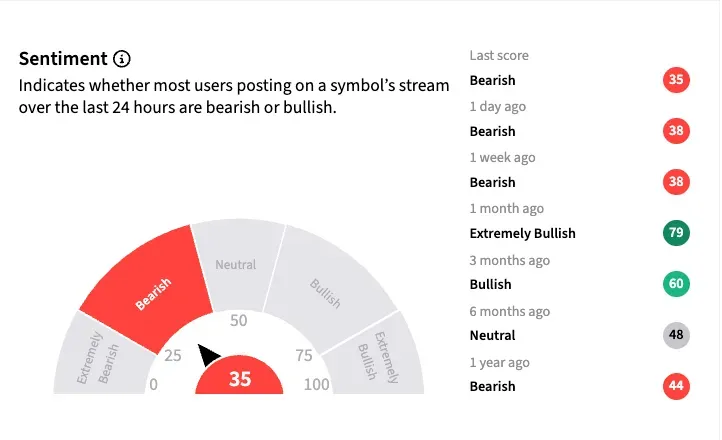

The Groq deal hasn’t worked any immediate magic for Nvidia among retail traders. Stocktwits sentiment toward NVDA remained “bearish” as of early Friday, though the timing — just after Christmas — likely reflects subdued trading activity.

Retail users on the platform who identified as ‘bearish’ shared a post from the anonymous professional community Blind, supposedly written by an Nvidia employee, that called Groq’s LPUs “bad architecture” and said they are nothing but a very long instruction word (VLIW) architecture with static random-access memory (SRAM). These users saw the deal as a bailout for people close to President Donald Trump, such as Chamath Palihapitiya, and as a “quid pro quo” for access to China. Palihapitiya made a seed investment in Groq in 2018, paying about $53 million for a 20% stake.

Nvidia’s Groq deal is the largest the chipmaker has ever made, and the structure of the agreement also helps the company avoid antitrust concerns. It is reminiscent of Meta’s deal to take a 49% stake in Scale AI for $15 billion, which allowed Meta to hire Scale AI’s founder, Alexandr Wang. Wang has since gone on to head Meta Superintelligence Labs.

Whether Huang’s newest gamble ultimately pays off will determine if Nvidia can extend its dominance in the AI chip market, or if it marks the beginning of a more competitive, margin-pressured era for the company.

For updates and corrections, email newsroom[at]stocktwits[dot]com.

