
Nvidia Launches Highly Anticipated Blackwell Successor: Here’s What Its New Rubin AI Chips Can Do

- Nvidia announced Vera Rubin at CES, the company’s latest data center system meant for AI workloads.

- Nvidia is said to have announced the chips earlier than usual.

- Shares of the chip designer were flat in Monday’s after-market session.

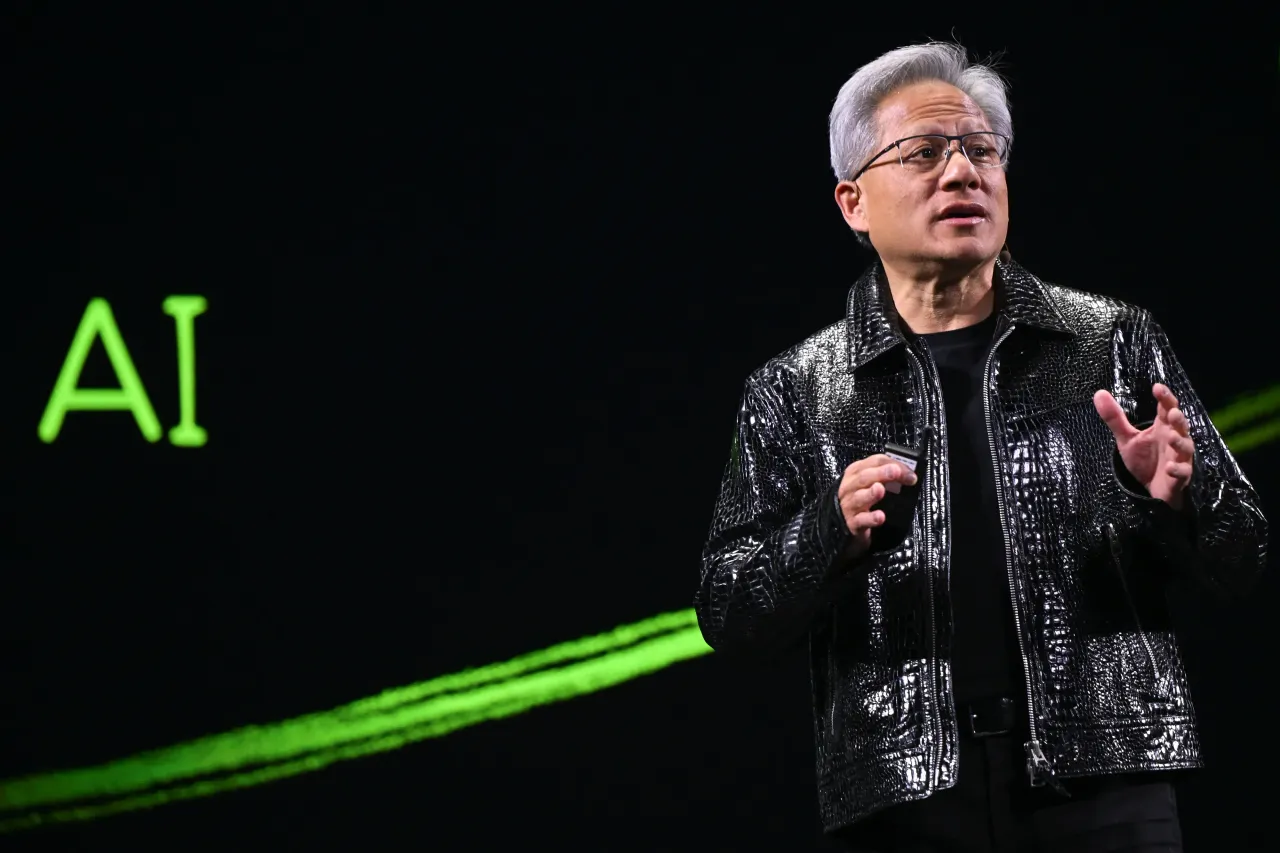

Nvidia on Monday officially announced Vera Rubin, its next-generation AI chips and the successor to its paradigm-shifting Blackwell architecture. The new chips will go on sale in the second half of the year.

Named after midcentury astronomer Vera Florence Cooper Rubin, Nvidia’s Rubin architecture comprises six chips designed to be used together as a single system. The company said the system is five times faster than Blackwell and delivers a 10-fold reduction in inference cost, while also addressing certain storage and interconnection issues in the previous-generation chips.

“Vera Rubin is designed to address this fundamental challenge that we have: The amount of computation necessary for AI is skyrocketing,” Nvidia CEO Jensen Huang said in a keynote at CES 2026. “Today, I can tell you that Vera Rubin is in full production.”

Faster AI Hardware Cycle

Notably, the unveiling came ahead of schedule: Nvidia typically details the specs and capabilities of its latest chips at its spring developer conference. The earlier timing is seen as a response to the swift pace of AI development and the burgeoning demand for consumer and enterprise AI applications.

Market Reaction

Nvidia shares were flat in the after-market session on Monday, after dropping 0.4% in the regular session, indicating that the latest offering did not entirely captivate investors. On Stocktwits, retail sentiment for NVDA shifted to ‘bullish’ from ‘bearish,’ with upbeat member commentary on Nvidia’s CES announcements.

In response to the news, Tesla and xAI founder Elon Musk said on X: “It will take another 9 months or so before the hardware is operational at scale and the software works well.”

Rubin Capabilities

The Rubin system will replace Blackwell, which in turn followed Hopper and Lovelace. These architectures have helped make Nvidia one of the world’s most valuable companies.

The Rubin chips are likely to be used by every major cloud provider, including through Nvidia’s high-profile partnerships with Anthropic, OpenAI, and Amazon Web Services. Nvidia had previously disclosed that they will also be used in HPE’s Blue Lion supercomputer and the upcoming Doudna supercomputer at Lawrence Berkeley National Lab.

The biggest draw of Vera Rubin is its dramatic reduction in inference and training costs compared to Blackwell. After China’s DeepSeek demonstrated last year that benchmark AI models can be built at a fraction of the price, and as executives and investors grow uneasy about soaring data-center spending, AI companies are scrambling to rein in the cost of developing their models.

Nvidia’s stock gained nearly 40% in 2025.

For updates and corrections, email newsroom[at]stocktwits[dot]com.
